Coupling of machine learning methods to improve estimation of ground coverage from unmanned aerial vehicle (UAV) imagery for high-throughput phenotyping of crops

Improvement of ground coverage using tree-based sub-pixel classification

Abstract

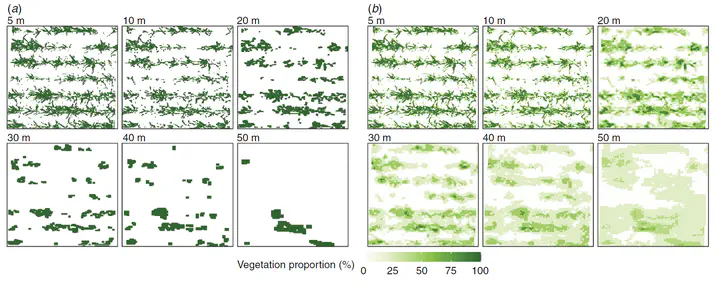

Ground coverage (GC) allows monitoring of crop growth and development and is normally estimated as the ratio of vegetation pixels to total pixels in nadir images captured by visible-spectrum (RGB) cameras. The accuracy of estimated GC can be significantly reduced by ‘mixed pixels’, an effect that depends on the spatial resolution of the imagery, as determined by flight altitude and camera resolution, and on crop characteristics (fine vs coarse textures). In this study, a two-step machine learning method was developed to improve the accuracy of GC of wheat (Triticum aestivum L.) estimated from coarse-resolution RGB images captured by an unmanned aerial vehicle (UAV) at higher altitudes. A classification tree-based per-pixel segmentation (PPS) method was first used to segment fine-resolution reference images into vegetation and background pixels. The reference images and their segmented counterparts were then degraded to the target coarse spatial resolution. These degraded images were used to generate a training dataset for a regression tree-based model, which established the sub-pixel classification (SPC) method. The newly proposed method (i.e. PPS-SPC) was evaluated with six synthetic and four real UAV image sets (SISs and RISs, respectively) with different spatial resolutions. Overall, the PPS-SPC method estimated GC more accurately than the PPS method in both SISs and RISs, with root mean squared errors (RMSE) of less than 6% and relative RMSE (RRMSE) of less than 11% for SISs, and RMSE of less than 5% and RRMSE of less than 35% for RISs. The proposed PPS-SPC method could potentially be applied in plant breeding and precision agriculture to balance accuracy requirements against UAV flight height, given limited battery life and operation time.
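
The mixed-pixel effect and the degradation step described above can be illustrated with a minimal sketch. It is not the authors' implementation: the block-averaging degradation, the 0.5 hard threshold, the RRMSE definition (RMSE divided by the mean reference GC) and all function names are assumptions made for illustration. The sketch degrades a fine-resolution vegetation mask into per-pixel vegetation fractions, which is the kind of target a regression tree-based SPC model would be trained to predict from coarse RGB values, and compares a hard per-pixel GC estimate with the fraction-based one.

```python
from math import sqrt

def ground_coverage(mask):
    """GC (%) as vegetation pixels / total pixels for a binary mask (1 = vegetation)."""
    total = sum(len(row) for row in mask)
    return 100.0 * sum(sum(row) for row in mask) / total

def degrade(mask, factor):
    """Block-average a fine mask (assumed degradation model).

    Each coarse pixel holds the vegetation fraction (0..1) of its block.
    Image dimensions are assumed to be divisible by `factor`.
    """
    coarse = []
    for r in range(0, len(mask), factor):
        out_row = []
        for c in range(0, len(mask[0]), factor):
            block = [mask[i][j]
                     for i in range(r, r + factor)
                     for j in range(c, c + factor)]
            out_row.append(sum(block) / len(block))
        coarse.append(out_row)
    return coarse

def hard_gc(fractions, threshold=0.5):
    """Per-pixel style GC: every coarse pixel counts wholly as vegetation or background."""
    flat = [f for row in fractions for f in row]
    return 100.0 * sum(1 for f in flat if f >= threshold) / len(flat)

def fractional_gc(fractions):
    """Sub-pixel style GC: the mean vegetation fraction over all coarse pixels."""
    flat = [f for row in fractions for f in row]
    return 100.0 * sum(flat) / len(flat)

def rmse(estimates, references):
    """Root mean squared error between estimated and reference GC values."""
    n = len(estimates)
    return sqrt(sum((e - r) ** 2 for e, r in zip(estimates, references)) / n)

def rrmse(estimates, references):
    """Relative RMSE (%), assumed here to be RMSE divided by the mean reference."""
    return 100.0 * rmse(estimates, references) / (sum(references) / len(references))

# Toy 4x4 fine-resolution segmentation (hypothetical data, 1 = vegetation).
fine_mask = [
    [1, 1, 0, 0],
    [1, 0, 0, 1],
    [0, 0, 0, 0],
    [0, 1, 0, 0],
]

reference_gc = ground_coverage(fine_mask)   # 31.25
fractions = degrade(fine_mask, factor=2)    # [[0.75, 0.25], [0.25, 0.0]]
gc_hard = hard_gc(fractions)                # 25.0: mixed pixels are lost
gc_frac = fractional_gc(fractions)          # 31.25: fractions preserve GC

print(reference_gc, gc_hard, gc_frac)
print(rmse([gc_hard], [reference_gc]), rrmse([gc_hard], [reference_gc]))
```

In this toy case the fraction-based estimate matches the reference exactly only because the true fractions are used directly; a trained regression model would predict them from image values and carry its own error.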